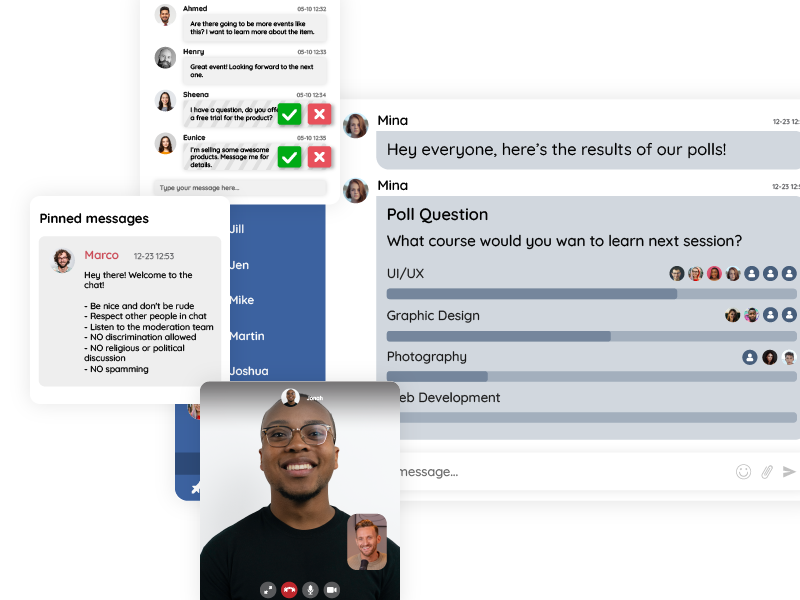

Online communities thrive when people feel safe, heard, and respected. But when a chat grows beyond a few dozen users into the hundreds or thousands, moderation becomes a real challenge. Trolls, spammers, predators, and toxic behavior can quickly disrupt the sense of community. That’s where chat moderation tools come in. For large communities, whether it’s an auction platform, a virtual classroom, or a live event stream, moderation tools aren’t just a nice-to-have; they’re essential.

In this article, we’ll break down the features that make chat moderation tools truly effective for large-scale conversations, explore real case studies, and even touch on technical options like SDK and API integrations that make moderation scalable.

Why Moderation Gets Harder as Communities Grow

When you’re running a small chat room, it’s relatively easy to keep an eye on things. A single moderator can manage occasional disruptive behavior. But as your chat grows into the hundreds or thousands of active users, several problems emerge:

- High message volume: Important discussions get buried under spam or irrelevant chatter.

- Bad actors: Trolls, spammers, or even predators may try to take advantage of the community.

- Scalability: One moderator can’t keep up with dozens of conversations happening at once.

- Community trust: Members are less likely to participate if they see offensive or harmful content.

For example, in educational settings, unmoderated chats can create opportunities for predators to exploit vulnerable students. That’s why predator-safe moderation tools, such as user identity verification, keyword filtering, and real-time blocking, are a must for schools and online classrooms.

Simply put: the larger the community, the stronger the moderation tools need to be.

Essential Chat Moderation Tools

Let’s start with the fundamentals: the tools every administrator needs in their toolkit to maintain safety and order.

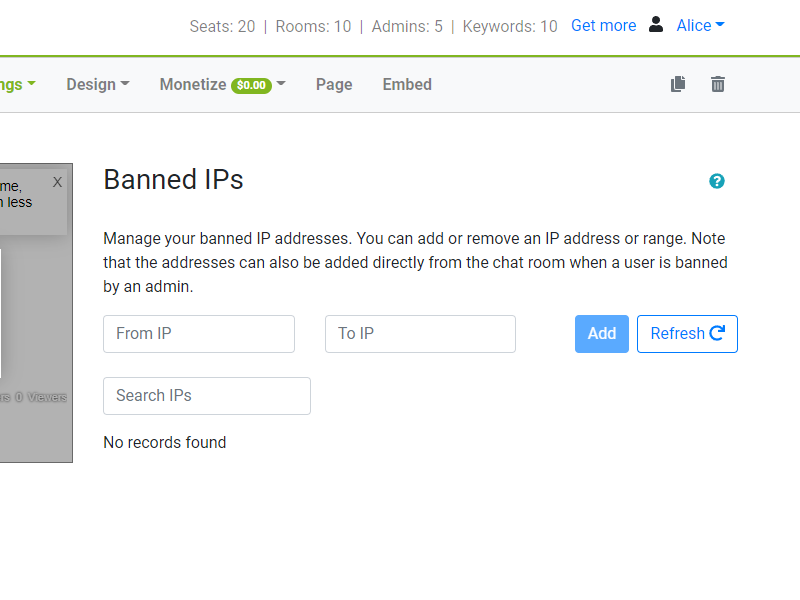

1. Ban a User’s IP Address

Sometimes, warnings and temporary bans aren’t enough. When a user repeatedly breaks community rules or behaves in a harmful way, banning their IP address ensures they can’t return under the same connection.

Why it matters for large communities:

- Prevents persistent trolls from rejoining under multiple accounts.

- Stops predators from continuously creating new usernames.

- Maintains the trust of community members who want a safe space.

Admins can manage banned IPs directly through the admin panel, with the option to unban if needed. This feature is especially powerful for public-facing events where anonymity makes disruptive behavior more likely.
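The following is a minimal sketch of the ban list that might sit behind such a panel. It assumes a simple in-memory store. The `IpBanList` class and its methods are hypothetical names, not any particular product’s API. It uses Python’s standard `ipaddress` module so that one address written two ways (for example `::1` and `0:0:0:0:0:0:0:1`) is treated as a single entry. Keep in mind that an IP ban is a blunt tool. A determined user can switch networks or use a VPN, and banning a shared address such as a school or office network can lock out innocent members.

```python
import ipaddress


class IpBanList:
    """Hypothetical in-memory IP ban list; a real deployment would persist it."""

    def __init__(self):
        # Store parsed address objects so equivalent spellings compare equal.
        self._banned = set()

    def ban(self, ip: str) -> None:
        self._banned.add(ipaddress.ip_address(ip))

    def unban(self, ip: str) -> None:
        # discard() is a no-op if the address was never banned.
        self._banned.discard(ipaddress.ip_address(ip))

    def is_banned(self, ip: str) -> bool:
        return ipaddress.ip_address(ip) in self._banned


bans = IpBanList()
bans.ban("203.0.113.7")
print(bans.is_banned("203.0.113.7"))  # True
bans.unban("203.0.113.7")
print(bans.is_banned("203.0.113.7"))  # False
```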

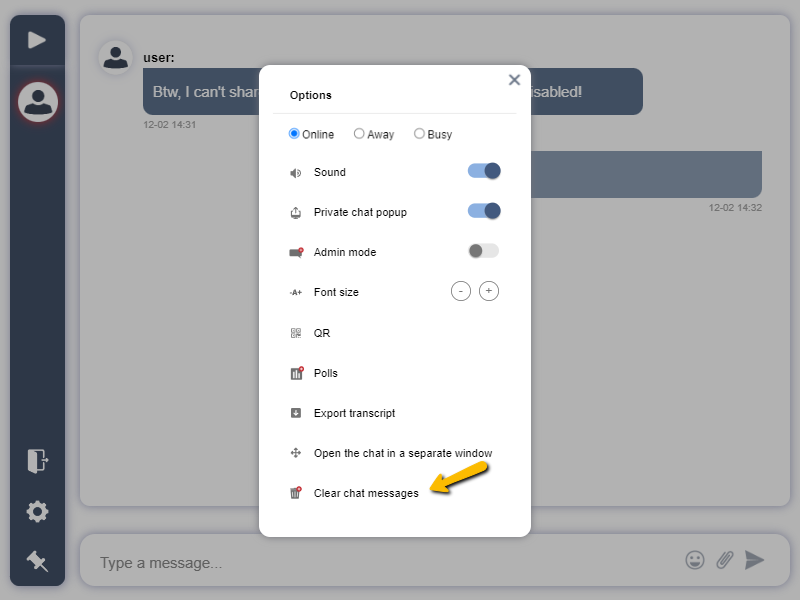

2. Deleting Harmful Chat Messages

Even the most well-behaved communities occasionally generate inappropriate content. The ability to quickly delete messages, either individually or by clearing the entire chat, keeps the environment clean and on-topic.

Use cases:

- Live auctions: Removing offensive bids or irrelevant comments that disrupt the flow.

- Corporate Q&A sessions: Ensuring questions remain professional and on-topic.

- Educational chats: Deleting inappropriate comments before students see them.

For admins, deleting is as simple as clicking a trash bin icon. In fast-moving conversations, this quick control makes all the difference.
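Under the hood, both actions are small operations on the message store. The sketch below assumes a hypothetical in-memory `ChatRoom` class. The audit log is also an added assumption. It is included because a record of who removed what supports the accountability point covered in the next section.

```python
from dataclasses import dataclass, field


@dataclass
class ChatRoom:
    """Hypothetical chat store supporting single deletes and a full clear."""

    messages: dict[int, str] = field(default_factory=dict)  # message_id -> text
    audit_log: list[tuple] = field(default_factory=list)    # moderation actions
    _next_id: int = 1

    def post(self, text: str) -> int:
        msg_id = self._next_id
        self.messages[msg_id] = text
        self._next_id += 1
        return msg_id

    def delete(self, msg_id: int, moderator: str) -> bool:
        # pop() with a default avoids a KeyError if the message is already gone.
        removed = self.messages.pop(msg_id, None)
        if removed is not None:
            self.audit_log.append((moderator, "delete", msg_id))
        return removed is not None

    def clear(self, moderator: str) -> int:
        count = len(self.messages)
        self.messages.clear()
        self.audit_log.append((moderator, "clear", count))
        return count


room = ChatRoom()
spam = room.post("BUY CHEAP FOLLOWERS")
room.post("What's the reserve price?")
room.delete(spam, moderator="alice")
print(list(room.messages.values()))  # ["What's the reserve price?"]
```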

3. Exporting Chat Transcripts

Exporting chat history isn’t just about record-keeping. In large communities, transcripts serve multiple purposes:

- Accountability: Keep a record of moderation actions.

- SEO value: User discussions can generate keyword-rich content for your site.

- Catching up: Members who missed a session can review past conversations.

- Proof in disputes: In auctions or trading communities, transcripts provide evidence if disagreements arise.

Admins can export chats in HTML, CSV, or Word format, while members can be allowed to download transcripts for their own use. This transparency strengthens trust in the platform.
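The CSV and HTML options are illustrated below with a minimal sketch that uses only Python’s standard library. The row layout (timestamp, user, message) and the function names are assumptions. Word export would need a third-party document library and is left out. Escaping matters if you publish transcripts on your site for their SEO value. Without it, a message containing markup would be rendered as live HTML.

```python
import csv
import html
import io
from datetime import datetime, timezone


def to_csv(rows) -> str:
    """rows: iterable of (timestamp: datetime, user: str, text: str) tuples."""
    buf = io.StringIO()
    writer = csv.writer(buf)  # quotes fields containing commas or quote marks
    writer.writerow(["timestamp", "user", "message"])
    for ts, user, text in rows:
        writer.writerow([ts.isoformat(), user, text])
    return buf.getvalue()


def to_html(rows) -> str:
    # html.escape keeps a message like "<script>" from becoming live markup.
    items = "\n".join(
        f"  <li><time>{ts.isoformat()}</time> <b>{html.escape(user)}</b>: {html.escape(text)}</li>"
        for ts, user, text in rows
    )
    return f"<ul>\n{items}\n</ul>"


rows = [
    (datetime(2024, 5, 1, 18, 30, tzinfo=timezone.utc), "bidder42", "Bid: $150, final offer"),
    (datetime(2024, 5, 1, 18, 31, tzinfo=timezone.utc), "seller_7", "Accepted <sold>"),
]
print(to_csv(rows))
print(to_html(rows))
```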

Advanced Features for Large-Scale Moderation

When managing hundreds or thousands of users, you need more than just the basics. Advanced tools help moderators scale their efforts.

Role-Based Moderators and Multi-Admin Support

Large communities benefit from multiple moderators with different permissions. Owners can assign roles (owner, admin, moderator) and distribute responsibility across time zones, ensuring 24/7 coverage.

Spreading the workload this way prevents burnout, and scoped permissions ensure no single moderator holds too much power.
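One way to model this is a simple role hierarchy combined with a minimum-role table. The article names the roles but not which role may do what, so the `REQUIRED_ROLE` mapping below is a hypothetical policy to adapt to your own rules. Unknown actions are denied by default, so a typo in an action name fails closed rather than open.

```python
from enum import Enum


class Role(Enum):
    MODERATOR = 1
    ADMIN = 2
    OWNER = 3


# Hypothetical policy: the minimum role required for each action.
REQUIRED_ROLE = {
    "delete_message": Role.MODERATOR,
    "mute_user": Role.MODERATOR,
    "ban_ip": Role.ADMIN,
    "export_transcript": Role.ADMIN,
    "assign_roles": Role.OWNER,
}


def can(role: Role, action: str) -> bool:
    required = REQUIRED_ROLE.get(action)
    # Actions missing from the table are denied.
    return required is not None and role.value >= required.value


print(can(Role.MODERATOR, "delete_message"))  # True
print(can(Role.MODERATOR, "ban_ip"))          # False
```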

Automation and AI Filters

Manual moderation can’t keep up with thousands of messages. Automated tools step in by:

- Blocking offensive keywords and profanity.

- Detecting spammy patterns like repeated messages or links.

- Filtering hate speech before it ever appears in the chat.

In predator-safe environments, automated keyword detection can stop harmful grooming attempts in real time.
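The rule-based part of this can be sketched in a few lines. This is an illustration of keyword and pattern rules, not an AI classifier. `MessageFilter`, the `BLOCKED_WORDS` set, and the thresholds are all hypothetical. The filter matches whole words rather than substrings, so “slurp” isn’t caught by “slur”. It also counts links and rejects a user’s repeated messages. Simple keyword lists are easy to evade with misspellings, which is why platforms often layer machine-learning models on top.

```python
import re
from collections import defaultdict, deque
from typing import Optional

# Hypothetical blocklist; real deployments maintain larger, curated lists.
BLOCKED_WORDS = {"badword", "slur"}
LINK_RE = re.compile(r"https?://\S+", re.IGNORECASE)
WORD_RE = re.compile(r"[a-z0-9']+")


class MessageFilter:
    def __init__(self, max_links: int = 1, repeat_window: int = 3):
        self.max_links = max_links
        # Each user's last few accepted messages, to catch copy-paste flooding.
        self.recent = defaultdict(lambda: deque(maxlen=repeat_window))

    def check(self, user: str, text: str) -> Optional[str]:
        """Return a reason string if the message should be blocked, else None."""
        words = set(WORD_RE.findall(text.lower()))
        if words & BLOCKED_WORDS:
            return "blocked keyword"
        if len(LINK_RE.findall(text)) > self.max_links:
            return "too many links"
        # Collapse case and whitespace so trivial variations still count as repeats.
        normalized = " ".join(text.lower().split())
        if normalized in self.recent[user]:
            return "repeated message"
        self.recent[user].append(normalized)
        return None


f = MessageFilter()
print(f.check("u1", "hello there"))    # None
print(f.check("u1", "Hello   there"))  # repeated message
```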

User Reporting and Community Self-Regulation

Empowering your members to report messages is one of the best ways to scale moderation. When a message is flagged, moderators get alerts and can act quickly.

This is especially important for youth-focused communities, where users may feel safer reporting than confronting inappropriate behavior directly.
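Below is a minimal sketch of the flag-and-alert flow, assuming an in-memory tracker. `ReportQueue`, its threshold, and the `notify` callback are hypothetical. Counting distinct reporters stops a single user from escalating a message alone. A youth-focused room might instead set `alert_threshold=1` so that every report reaches a moderator immediately.

```python
from collections import defaultdict


class ReportQueue:
    """Hypothetical tracker: alerts moderators once enough distinct users flag a message."""

    def __init__(self, alert_threshold: int = 3, notify=print):
        self.alert_threshold = alert_threshold
        self.notify = notify               # e.g. push to a moderator dashboard
        self.reporters = defaultdict(set)  # message_id -> reporter ids
        self.alerted = set()               # messages already escalated

    def report(self, message_id: int, reporter: str) -> None:
        # A set ignores repeat reports from the same user.
        self.reporters[message_id].add(reporter)
        count = len(self.reporters[message_id])
        if count >= self.alert_threshold and message_id not in self.alerted:
            self.alerted.add(message_id)
            self.notify(f"Message {message_id} flagged by {count} users")


queue = ReportQueue(alert_threshold=2)
queue.report(42, "student_a")
queue.report(42, "student_b")  # prints: Message 42 flagged by 2 users
```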

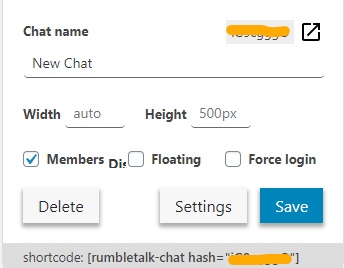

Platform Integration for Seamless Moderation

Moderation becomes much more effective when it’s tied directly to your platform’s user base.

- SDK Integration: Automatically log users into the chat with their platform identity. Moderators can see real names or verified profiles, making it harder for trolls to hide.

- REST API: Programmatically ban users, clear chats, or adjust settings without needing to log into the admin panel. Perfect for developers running large-scale events or platforms.

- WordPress Plugin: For membership sites running on WordPress, installing a chat plugin allows moderation tools to be used instantly without coding.
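Here is a rough sketch of what a programmatic ban might look like using Python’s standard `urllib.request`. The article does not document the actual API. The base URL, the `/chats/{id}/bans` path, the JSON fields, and the bearer-token header are all placeholders for illustration. The request is built but not sent; substitute the details from your provider’s API documentation before calling it.

```python
import json
import urllib.request

# Hypothetical values: replace with the base URL and auth scheme from your provider's docs.
API_BASE = "https://api.example.com/v1"
API_KEY = "YOUR_API_KEY"


def build_ban_request(chat_id: str, user_id: str, reason: str) -> urllib.request.Request:
    body = json.dumps({"user_id": user_id, "reason": reason}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/chats/{chat_id}/bans",  # placeholder endpoint
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
    )


req = build_ban_request("auction-room-1", "user-123", "spam bids")
# Sending is commented out because the endpoint above is a placeholder:
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(resp.status)
```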

Case Studies: Chat Moderation Tools in Action

Here are some case studies where chat moderation tools were used.

Live Events

A global music festival streamed online drew thousands of simultaneous viewers. Moderators used keyword filters to block spam links and IP bans to remove trolls instantly. This kept the Q&A chat focused on artist interactions.

Online Auctions

In an online auction community, moderators used transcript exports as proof of bids, while spam filters blocked bots from flooding the bidding process. This transparency encouraged trust among bidders.

Educational Platforms

Schools using chat for virtual classrooms relied heavily on predator-safe moderation tools:

- Requiring verified logins through the SDK.

- Enabling moderators to mute or ban inappropriate users instantly.

- Exporting transcripts for teachers to review after class.

This ensured a safe learning space for students, with accountability for both teachers and moderators.

Building Predator-Safe Communities with Chat Moderation Tools

Drawing from the insights of the predator-safe chat blog, here are a few more best practices for protecting vulnerable members in large communities:

- Real identity checks: Linking chat accounts to verified platform logins.

- Continuous monitoring: Multiple moderators in every active room.

- Transparency: Using transcripts and logs for follow-up in case of reports.

- Community guidelines: Clear rules visible to all members so moderation feels fair.

The combination of technical tools and community culture makes large chats safer for everyone.

Building a Safe and Scalable Community

Large communities live or die by the quality of their moderation. Without the right tools, even the most vibrant chat can collapse under the weight of trolls, spam, or unsafe interactions.

The best chat moderation tools combine:

- Core controls like bans, deletions, and transcripts.

- Advanced features like automation, reporting, and multi-admin support.

- Technical integrations (SDK, REST API, WordPress plugin) that let moderation scale seamlessly.

When applied effectively, moderation builds trust, engagement, and growth. It’s not about silencing people; it’s about giving your community the freedom to connect safely.